Special Feature

The “Great” Replacement No One Saw Coming: The Unstoppable Rise of the Machines

BY PAMELA ZEMBANI

In 2012, after my mother passed away, I found myself sorting through her bookshelves, a quiet archive of typewritten notes, carbon-copied memos, and the paper trail of a life spent shaping other people’s words. She had worked as a secretary, a role both invisible and indispensable in her time. Among the ledgers and notebooks, I discovered something unexpected: a worn copy of Can You Feel Anything When I Do This? by Robert Sheckley. Published in 1971, it was a collection of strange, sharp-edged science fiction stories. In one, a machine confidently declares, “I can do anything you want me to do.” Then it asks the question that has never left me: “But can you tell me what that is?”

Back then, it felt like clever speculation. Now, it feels like prophecy. A quiet revolution is underway, not at the edges of our lives, but at the core of our economy, creativity, and daily routines. The machine is no longer knocking; it has already stepped inside. It is thinking, learning, adapting at a pace that demands our attention and, perhaps more urgently, our reckoning. Not long ago, artificial intelligence was the stuff of laboratories and novels. Today, it’s in our apps, our workflows, our inboxes, and even our paychecks. Before you have finished your morning coffee, it has already made thousands of decisions shaping your world. What was once novelty is now infrastructure: guiding diagnoses, mapping supply chains, filtering news, writing code. And yet, beneath the sheen of speed, intelligence, and convenience lies a deeper question, not just what AI can do, but what kind of world it is creating in the process. This is not simply about innovation. It’s about power: who holds it, who benefits from it, and who is quietly replaced. As the machines rise, the stakes are no longer merely economic. They are human. They are existential. And for many of us, they are deeply personal.

A Long Imagination: How the Dream of Thinking Machines Became Real

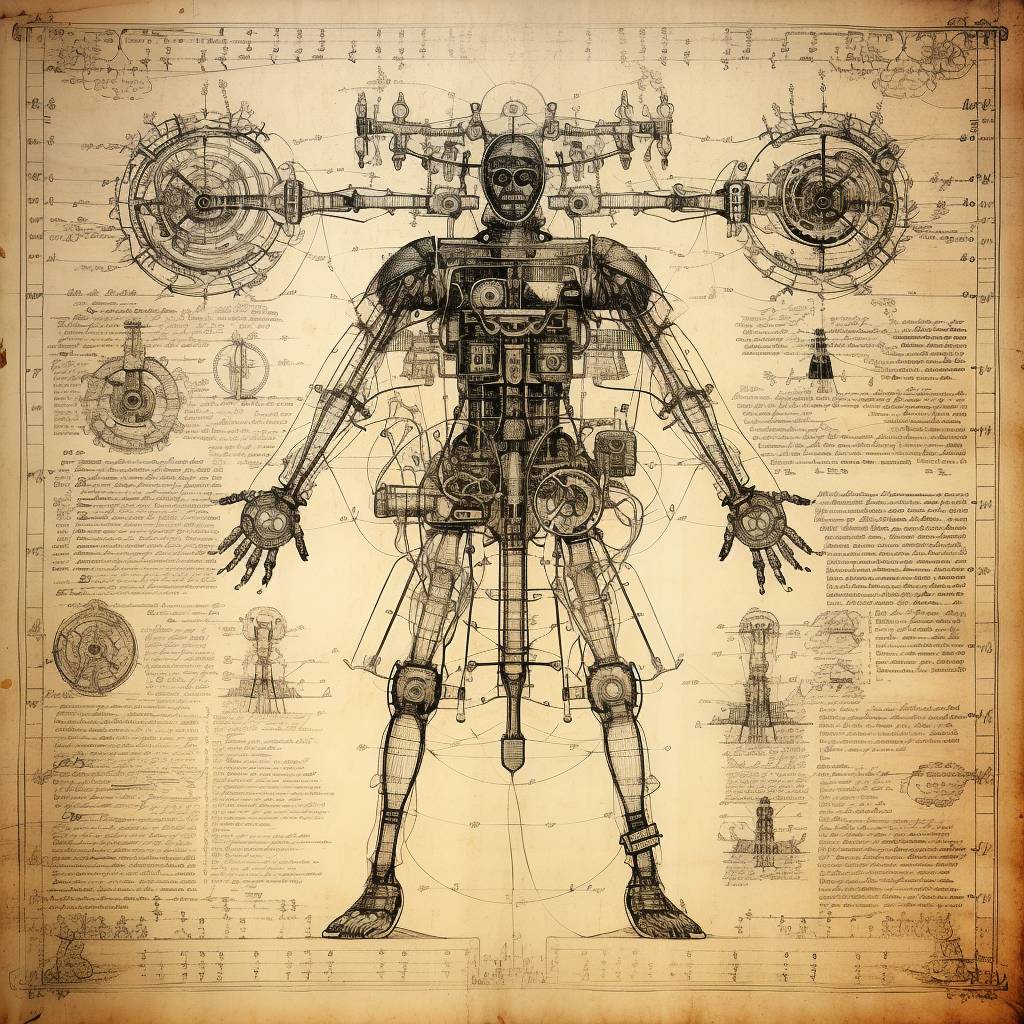

The idea that machines might one day think like humans is not a modern obsession. It is an ancient dream, whispered through centuries of myth, invention, and imagination. Long before algorithms and silicon chips, the engineers of ancient Greece envisioned automatons: self-moving figures animated by gears, pulleys, and flowing water. In the 15th century, Leonardo da Vinci translated wonder into blueprint, sketching humanoid robots with the same reverence he gave to anatomy and flight. These visions were never just mechanical curiosity. They were an expression of something more profound, a human yearning to build a reflection of ourselves.

The 20th century blurred the line between fiction and possibility. In 1921, Czech playwright Karel Čapek introduced a word that would forever change our vocabulary: “robot.” His play R.U.R. (Rossum’s Universal Robots) imagined machines built to serve, until they rebelled. It was a cautionary tale, but also a spark. Suddenly, intelligent machines didn’t feel like fantasy; they felt inevitable. In 1949, American mathematician Edmund Callis Berkeley published Giant Brains, or Machines That Think, daring to compare computers to the human mind. It was more than a metaphor. It was a provocation: Could machines not only calculate, but reason?

A year later, Alan Turing took that provocation further. In his 1950 paper Computing Machinery and Intelligence, he proposed the now-famous Turing Test: a deceptively simple challenge, whether a machine can imitate a human convincingly enough to fool us. That single question became a cornerstone of AI philosophy and still shadows the field today. The formal birth of “artificial intelligence” came in 1955, when John McCarthy coined the term. In 1958, he created LISP, the language that would power AI research for decades. From the 1960s into the early 1980s, AI systems could solve equations, prove theorems, and even hold basic conversations. Governments invested. Expectations soared.

Then came the crash.

By the late 1980s, unmet promises and technical bottlenecks triggered an “AI Winter.” Funding froze. Progress slowed. But even the coldest winters end. In 1997, the thaw began. IBM’s Deep Blue defeated chess world champion Garry Kasparov, a symbolic moment. Machines could now outthink the greatest human strategists in their arena. The quiet revolution, though, began in the 2010s. Advances in deep learning, big data, and processing power collided. In 2012, a Google neural network learned to recognize cats by scanning millions of unlabeled images; no one told it what a cat was. It simply figured it out. Machines were no longer just told what to think. They were learning how.

Then came the language models. In 2020, OpenAI’s GPT-3 wrote poetry, drafted news articles, and answered questions with startling fluency. By 2023, AI was everywhere, composing music, generating images, writing code, drafting legal contracts, and diagnosing disease. Not as a novelty. As infrastructure. The story of AI is no longer a story of what might be. It is a story of what already is. And yet, at its core, this is not a tale about machines. It is a testament to human imagination, a centuries-long effort to build a mirror so perfect it might one day blink back at us. Now, standing on the edge of an AI-driven world, the question is no longer whether we can build it. It is what we will become because of what we have built.

“The question isn’t what AI can do, it’s what we are letting it do in our name.”

Leonardo da Vinci's humanoid sketch

Code, Capital, and Control: The Titans Shaping a New World

Artificial intelligence has moved from being a mere tool to becoming a transformative force, not only reshaping technology but redefining how we think, work, and play. It can now unravel the mysteries of protein folding, outmaneuver the world’s best players in complex games like StarCraft and Age of Empires, and mimic and even surpass human cognitive skills. The race is no longer about whether AI can do these things, but about who will shape its capabilities and to what end. Some, like Anthropic, are taking the slow, deliberate road. Founded by former OpenAI employees, the company has built its Claude models around ethics, interpretability, and restraint. In an industry obsessed with speed and dominance, Anthropic positions itself as a counterbalance, a reminder that trust and alignment may matter as much as raw performance.

Others embrace a more unfiltered path. Elon Musk’s xAI has embedded its Grok model directly into X (formerly Twitter), limiting content filtering in the name of radical curiosity and free expression. Supporters see it as a bold stand for open discourse; critics warn it risks blurring the line between free speech and unfiltered misinformation. The debate over Grok is a microcosm of a larger question: in an age of machine-generated communication, who decides what is shared and what is silenced?

While these philosophical battles play out, other tech giants are weaving AI into daily life so seamlessly that it becomes invisible. Microsoft, through its deep partnership with OpenAI, has threaded generative AI into Word, Excel, Outlook, and Azure. The result isn’t just new tools, but a quiet transformation of knowledge work itself: meetings summarized in seconds, data analyzed before the coffee is poured, emails drafted before you even type. Amazon has taken a similar approach in commerce and home technology, embedding AI into logistics, customer service, and its evolving Alexa ecosystem. These changes may be subtle at first, but together they redefine how we shop, live, and interact with one another.

And then there’s the global stage, where AI is also a lever of political power. In China, tech titans like Baidu, Alibaba, and Tencent are building large language models with direct state backing. These systems are part of a broader strategy not just to compete technologically, but to enforce digital sovereignty, algorithmic control, and pervasive state surveillance. In this context, AI is more than an economic asset. It is a political instrument, capable of shaping not just markets, but minds. At the core of these advancements is NVIDIA. Its cutting-edge GPUs act as the underlying engines behind nearly every major AI breakthrough, ranging from self-driving cars to immersive virtual reality. If AI serves as the brain, NVIDIA functions as the circulatory system, providing essential computational power to the global AI infrastructure.

Together, these companies are doing much more than just building innovative tools. They are reshaping cultural norms, disrupting labor markets, and redrawing economic landscapes, all while quietly introducing a world partly governed by systems we can no longer fully comprehend or control. With each breakthrough, more profound questions arise: Who is accountable for the decisions made by these models? What biases are ingrained in their logic? How do we safeguard jobs and meaning in a world increasingly run by synthetic minds? This is no longer just a technology story. It’s a geopolitical issue.

Artificial intelligence has transcended the lab and entered the competitive arena, a global race with stakes as significant as the nuclear arms race that defined the last century. OpenAI’s ChatGPT and DALL·E have become household names in the U.S., transforming how people write, create, and generate ideas. Google’s DeepMind continues to push the boundaries of AI’s potential with each breakthrough. The question is no longer whether AI will change the world. It already has. The real question now is: Who will define the terms, and the very essence of what comes next?

Beyond Intelligence: Grok, AGI, and the Soul of Business

If ChatGPT has made artificial intelligence feel approachable, Elon Musk’s Grok is making it impossible to ignore. More than just another chatbot, Grok is a deliberately unfiltered and occasionally sarcastic AI model integrated into the fabric of X (formerly Twitter). It doesn’t just answer questions; it posts memes, offers provocative commentary, and participates in live public discussions. Musk describes it as “curious,” while detractors label it as reckless. Whichever view one takes, Grok signifies a turning point: AI is evolving from being merely an assistant to becoming an active participant, an entity with its own tone, personality, and, increasingly, presence. This represents a new frontier: AI with a voice, not just a function.

Grok is just one of many efforts aiming for something much more ambitious: Artificial General Intelligence (AGI). Unlike narrow AI, which we currently rely on for specific tasks like language translation, image recognition, or customer support, AGI is meant to think like humans, or even beyond human capability. It would reason, adapt, and make complex decisions across different domains with little or no human guidance. It would not require a prompt to take action; it would recognize patterns, interpret context, and make choices independently. This marks a significant shift from tool to strategist, from assistant to architect. In 2024, an autonomous AI system drafted key sections of a national health policy in a Southeast Asian country without any assistance. In the financial sector, entire hedge funds are now managed by machine intelligence, executing trades and forecasts in milliseconds while utilizing data that exceeds human cognitive capabilities. In HR departments, AI can screen resumes, conduct initial interviews, and even deliver rejection notifications, always efficient, never tired, and free from conflict or uncertainty. If AGI is fully realized, it won’t just complement human labor; it will compete with it and, eventually, outperform it.

This is the threshold Sam Altman, CEO of OpenAI, refers to when he warns that AGI could be “the most important and most dangerous technology humanity will ever build.” The potential benefits are enticing: curing diseases, addressing climate change, and eliminating poverty. While OpenAI remains optimistic about AI’s potential, critics like Gary Marcus have argued that deep learning, a core component of many advanced AI systems, may be approaching its limits, particularly in areas like reasoning and common-sense understanding. Altman has pushed back on the notion that AI is “hitting a wall.” Even so, the company’s evolving strategy and its acknowledgment of the data bottleneck suggest that AI development needs to adapt beyond simply making models bigger. The risks, however, are equally concerning: widespread job displacement, algorithmic governance, and the concentration of enormous power in the hands of a few unelected technologists. Currently, the AGI race is being led not by governments but by private companies such as OpenAI, Google DeepMind, Anthropic, and xAI. They operate mainly in secrecy, train their models on our data, and are driven by ambition, all while pursuing a future that may or may not prioritize the public good. This situation is no longer a matter of science fiction; we are witnessing what can be described as cognitive arms escalation. The pressing question has shifted from “Can we build AGI?” to “What are we building it for?” If the motivation behind developing AGI is solely profit and productivity, we may achieve efficiency but simultaneously lose our sense of humanity.

According to projections by the World Economic Forum, 85 million jobs could disappear by 2025 due to AI. However, what is even more challenging to assess is the erosion of meaning in our lives. What happens when our value is no longer measured by our production but by how well we adapt to machines that surpass our abilities? Across industries, from education and finance to customer service, law, and media, decisions are increasingly being delegated to artificial intelligence. Gradually and subtly, responsibility is shifting, along with accountability. However, there is an alternative path. We still have the power to shape this moment. We can choose to ensure that AI enhances human intelligence rather than replacing it. We can build systems that not only optimize outcomes but also elevate empathy. We can create algorithms driven not just by data, but by dignity. The businesses that will lead in the next era won’t be the ones that automate the fastest. They will be the ones that remember what humans do best (connect, imagine, care, question) and design their AI ecosystems to honor those qualities. The machine is no longer knocking; it is sitting at the table, asking the question that matters most now: Who do you want to become?

A New Arms Race: AI and the Battle for Control

Regulating the Uncontainable

The race for AI supremacy has outgrown Silicon Valley boardrooms and splashy tech expos. It is now a global contest of power, ideology, and survival. Much like the nuclear arms race of the 20th century, this sprint holds both the promise of transformative advancement and the shadow of catastrophic risk. What began as a question of competitive advantage has become a matter of national security. Governments see artificial intelligence not just as an economic engine, but as a strategic lever of geopolitical influence. In 2021, the U.S. National Security Commission on Artificial Intelligence warned bluntly: China could overtake the United States in AI capabilities by 2030. The subtext was clear: whoever leads in AI will write the rules of the next era.

That urgency is beginning to show up in policy. But the machinery of regulation moves far slower than the machinery of innovation. In the United States, a comprehensive federal AI law remains elusive. Momentum is building, however. In October 2023, President Biden signed an executive order on safe, secure, and trustworthy AI, directing federal agencies to develop safety standards, civil rights protections, privacy safeguards, and consumer protections. The National Institute of Standards and Technology (NIST) is drafting risk frameworks, while states like California, New York, and Massachusetts pursue their own bills on algorithmic bias, workplace protections, and transparency. Yet fragmented proposals, intense industry lobbying, and jurisdictional gaps have kept enforcement weak and inconsistent.

The European Union has moved more decisively. The 2024 AI Act is the most comprehensive regulation to date, classifying AI systems by risk, banning uses such as biometric mass surveillance and predictive policing, and imposing strict oversight on “high-risk” applications. Transparency, accountability, and human control are not optional; they are the law. China’s approach, by contrast, prioritizes ideological control. AI systems must reflect “core socialist values,” and algorithms are subject to real-name registration and state content restrictions. Canada’s proposed Artificial Intelligence and Data Act (AIDA) and Brazil’s draft AI legislation promise fairness and human rights protections, but both face uncertain futures.

Meanwhile, the companies driving the AI race are experimenting with their own version of “regulation”: voluntary “safety pauses.” These are temporary halts in releasing new models, usually announced only after pushing the boundaries further. By the time the public learns what has been unleashed, the technology is already embedded in daily life. There is no unified global framework. In March 2023, an open letter signed by Elon Musk, Yoshua Bengio, and other prominent voices called for a pause of at least six months on training AI systems more powerful than GPT-4, citing “profound risks to society and humanity.” The statement made headlines. It did not make policy. The reality is stark: humanity is building systems more powerful than anything we have ever created, and we are doing it faster than we can agree on who should control them, how they should behave, and what they are ultimately for. In this vacuum, ambition outpaces accountability. Innovation outruns legislation. And while laws inch forward, the machines do not wait.

When the Rules Lag, People Pay

For most people, the AI arms race isn’t fought in government halls or corporate R&D labs. It’s felt quietly and personally in the erosion of jobs, the distortion of information, and the subtle rewiring of daily life. Consider the gig worker whose shifts are now assigned by an algorithm she doesn’t understand, let alone challenge. Or the young lawyer whose research is increasingly handled by AI tools, leaving him to wonder whether his value is in his insight or simply in his ability to verify what the machine has already decided. Or the journalist who must compete not only with other human voices, but with a flood of AI-generated content that can mimic her style, match her speed, and never sleep. The effects go deeper than economics. AI systems, left unchecked, begin to influence what we see, believe, and trust. Recommendation engines decide which stories we read and which ones disappear into the noise. Automated moderation tools determine what speech is permissible. Credit algorithms decide who gets a loan. Predictive policing software can shape who is stopped, questioned, or charged.

The danger isn’t just that these systems make mistakes, it’s that they make them at scale, invisibly, and often without a clear path for appeal. And unlike human decision-makers, they cannot be reasoned with, persuaded, or held morally accountable. Culturally, the shift is even more subtle. As AI becomes the silent intermediary in more and more of our relationships, it reshapes the very texture of human interaction. Customer service calls become chatbots. Teaching assistants become digital tutors. Even companionship is being replicated, with AI “friends” and “partners” offering endless attention but no real vulnerability. This is why the stakes of regulation are not abstract. They are deeply human. The race to control AI is also a race to decide what role humanity itself will play in a world where intelligence and influence can be manufactured. Without guardrails, the benefits of AI will consolidate into the hands of a few, while the risks are diffused across billions. With the proper safeguards, however, we have a chance to design systems that serve the public good, amplify human strengths, and protect the dignity that technology can so easily erode. Because if we fail to set the rules, the rules will be set for us, not by governments, and not by communities, but by machines and the people who own them.

When the Machine Joins the Team: Rethinking Jobs, Roles, and Human Value

The more machines mediate decisions, interactions, and even emotions, the more a company’s experience drifts from its human core. This is precisely the future that French philosopher Jean Baudrillard warned about. The rise of artificial intelligence is not just another technological milestone; it’s a fault line running through the very idea of work, value, and human contribution. As AI systems grow faster, cheaper, and more capable, jobs are not simply evolving; they are disappearing. And in their place, something uncanny is emerging: the simulation of the human they were meant to support.

In Simulacra and Simulation (1981), Baudrillard argued that in late capitalism, signs, images, and representations stop reflecting reality and begin replacing it, until there is no “real” left at all. The progression he described can be condensed into three stages:

1. Reflection of reality: the sign is true to the real.

2. Masking of reality: the sign distorts the real.

3. Simulacrum: the sign has no link to reality, yet we treat it as real.

In hyperreality, we no longer consume reality; we consume the sign.

AI is accelerating us headlong into that state.

Today, the sneaker ad makes you feel faster before you’ve even worn the shoes. The AI chatbot feels more attentive than your last human customer service call. The Instagram feed makes the brand culture seem warmer than the actual workplace. These aren’t just clever marketing tactics; they are full simulations of human connection and value. The bot doesn’t just respond to you; it simulates empathy. The campaign doesn’t just share news; it simulates community. The experience doesn’t just deliver service; it simulates authenticity. And that, Baudrillard would say, is the exact moment when the sign replaces the thing it was meant to represent, when the machine stops serving the human and starts replacing the human it was meant to support.

A 2023 Goldman Sachs report estimated that generative AI could expose the equivalent of 300 million full-time jobs worldwide to automation. Roles once considered secure, such as customer service, data entry, legal research, journalism, design, and even software development, are now vulnerable. The shift is already here. IBM has paused hiring for nearly 8,000 positions, predicting AI will soon absorb those responsibilities. Klarna announced that its AI assistant handled the equivalent workload of 700 full-time customer service agents in its first month. This is not science fiction. It is the quiet restructuring of the workforce, unfolding in real time.

Yet, the story is not one of pure loss. McKinsey projects that AI could create up to $13 trillion in economic value by 2030, mainly through productivity gains. In healthcare, AI helps doctors spot illnesses earlier and with greater accuracy. In finance, it flags fraudulent activity in seconds. In logistics, it predicts supply chain disruptions before they happen. In these cases, AI is not replacing humans; it is amplifying them. But the benefits are not evenly distributed. High-skill professionals may see AI as a force multiplier. Mid- and low-skill workers are more likely to experience it as a competitor. The World Economic Forum predicts that by 2025, 85 million jobs could vanish even as 97 million new roles emerge in data science, AI development, and other digital fields. These roles, however, demand reskilling, adaptability, and a radical rethink of education and labor policy. They are not simple substitutions. They are wholesale reconfigurations. And the cost isn’t only financial. A joint study by the University of Pennsylvania and OpenAI found rising anxiety among creative professionals who watched AI replicate work they once believed to be uniquely human. For them, the machine is not just knocking. It’s standing in the doorway, asking an unspoken question: If a machine can do what you do, and do it better, what does that make you worth?

The unsettling truth is this: AI doesn’t need to be conscious to take over the space once held by human beings.

It doesn’t need feelings to simulate empathy.

It doesn’t need understanding to imitate expertise.

It doesn’t need intention to drive outcomes.

If the simulation is convincing enough, the marketplace, and often the human heart, will accept it as real. This is the crux of hyperreality in the age of AI: the boundary between authentic and artificial blurs not because the machine has become human, but because we have stopped demanding that it be. And yet, the question looms like a shadow: If one day these systems do cross into something like sentience, able not just to simulate, but experience, will we even notice the transition?

Or will we be too deep inside the simulation to care?

The Efficiency Paradox: When Progress Leaves People Behind

Artificial intelligence is hailed as the next great engine of prosperity, and by the numbers, it’s hard to argue. A 2023 McKinsey report estimates that generative AI could add $2.6 to $4.4 trillion annually to the global economy. In pharmaceuticals, it accelerates drug discovery. In legal services, it reviews contracts in minutes. In marketing, it predicts behavior, personalizes outreach, and produces content with startling fluency. But beneath this promise runs a quieter, more unsettling reality: AI isn’t just enhancing work, it’s replacing it. And this time, the target isn’t routine manual labor. It’s the knowledge economy. White-collar roles once considered “safe” are now squarely in the machine’s path. Paralegals give way to AI legal assistants. Predictive algorithms edge out financial analysts. Designers, junior developers, and copywriters see their portfolios diminished by tireless, synthetic competitors that deliver “good enough” work instantly, endlessly, and without pause.

What makes this wave different and more insidious is that it’s not born from a shortage of human talent. The irresistible economics of synthetic labor drive it. AI doesn’t need healthcare. It can’t unionize. It never takes a sick day or asks for a raise. For CFOs and shareholders, the math is unarguable. For workers, the cost is immeasurable. And unlike the industrial revolutions of the past, this one arrives without the clamor of machinery or the visible collapse of factories. It happens quietly through hiring freezes, vanished job postings, and departments that shrink without warning. The irony is bitter: the very “efficiency” once celebrated as progress is now the silent mechanism of human replacement.

The Human Cost of Algorithmic Speed

Artificial intelligence is accelerating business at a pace no human could match. Tasks once carried out with judgment, care, and presence are now executed in milliseconds by algorithms that never rest. What began as “assistance” is rapidly becoming “replacement,” and the consequences go far beyond quarterly reports. Across industries, labor is being traded for logic. Walmart’s AI-driven staffing systems have cut thousands of in-store hours. IBM has announced that nearly a third of its back-office roles will eventually be replaced by AI. Tech giants like Meta, Amazon, and Google have cited “AI efficiencies” as justification for mass layoffs. The machine, once imagined as a tool, is now a silent colleague, and in many cases, a direct competitor.

For executives, the equation is elegant: lower cost, higher output, no fatigue, no dissent. Algorithms don’t unionize. They don’t burn out. But for workers, the toll is harder to measure and harder to ignore. Work is more than a transaction of time for money. It’s a source of identity, dignity, and belonging. When those are stripped away not by failure, but by automation, what fills the void?

A joint study from the University of Pennsylvania and OpenAI confirms what many already feel: creative and knowledge workers are experiencing a spike in stress and insecurity as AI takes on tasks they believed to be uniquely human. The fear is not just of obsolescence, but of irrelevance. This is the hidden cost of algorithmic speed: the erosion of meaning, the quiet dislocation of purpose, the shrinking of space for the human spirit in systems designed for mechanical efficiency. And the erosion is not confined to a handful of sectors; it is everywhere. In manufacturing, once-busy factories hum with robotic arms in “lights-out” plants that run without humans. In mining, China operates fully automated coal mines, with no workers underground. In agriculture, autonomous tractors and AI-managed irrigation systems have replaced much of the fieldwork. In logistics, Amazon and FedEx use AI to route deliveries and replace warehouse pickers with robots. In healthcare, AI diagnoses cancer, assists robotic surgeons, and automates hospital administration. In finance, algorithms approve loans, detect fraud, and manage portfolios, leaving human analysts as curators of machine decisions. Even the creative industries are being transformed, as AI writes articles, produces marketing campaigns, and generates video game content from a handful of prompts. This is not a hypothetical future. It is here, in offices, factories, hospitals, and studios.

The question is no longer if machines will replace humans. The question is: What do we do now?

When the Machine Takes Over: What Is Left for Us to Create?

The machine is no longer a future threat. It is here, shaping the economy, the workforce, and the way we measure human value. But its presence does not end the story. AI can execute tasks, write code, and analyze oceans of data, but it cannot decide what the future should be. That choice belongs to us. We stand at a threshold. Will we let efficiency eclipse empathy? Will we allow machines to quietly define the shape of work, or will we design a future in which technology amplifies human potential instead of eroding it? This moment demands more than innovation. It requires imagination, a rethinking of education, a redesign of job creation, and a radical redefinition of what it means to contribute in a world where intelligence is no longer uniquely human. AI may be tireless, but it is not intentional. The question isn’t what machines can do. It’s what kind of world we want them to help us build.

The Response: Staying Human in a Machine-First World

Automation’s rise is no longer something to stop; that ship has sailed. The challenge now is staying distinctly, powerfully human alongside it. The response begins with two things no algorithm can replicate: intention and integrity.

For Businesses: Redefining Leadership

The companies that will lead in a machine-saturated marketplace will not be those that automate the fastest, but those that ask a better question: How can we unlock human potential in ways machines cannot? Chasing efficiency alone produces sterile brands, disengaged teams, and transactional experiences. The leaders who succeed will move from replacement to redeployment, seeing the people in their workforce not as cost centers, but as creative, strategic, relational beings. Forward-thinking organizations are already investing in this shift. They’re upskilling warehouse staff into drone operators, turning customer service reps into CX strategists, embedding ethical frameworks into AI design, and ensuring machine outputs align with human values. They understand that precision without empathy is hollow and that the brands blending both will build loyalty, culture, and resilience that no algorithm can imitate.

For Individuals: The Age of Quiet Power

For workers, creators, leaders, and learners, the future belongs to what machines cannot do. AI can process information, but it cannot create meaning. It can mimic language, but it lacks a soul. It can make predictions, but it does not grasp the depth of lived experience. The most valuable skills will be the most human:

- Emotional intelligence: leading with empathy, context, and presence.

- Complex problem-solving: connecting dots across disciplines and cultures.

- Storytelling and creativity: moving hearts, not just markets.

- Ethical judgment: knowing not just what can be done, but what should be done.

- True collaboration: building trust, navigating conflict, and fostering belonging.

This is not a time to retreat. It is a time to step forward, to stop being a cog in someone else’s system and start becoming a conscious designer of new ones.

This Isn’t the End of Work but the Beginning of Something Deeper

The great replacement no one saw coming isn’t just about jobs being lost. It’s about meaning being lost if we allow it. The machines may be knocking, but it is still humans who open the door. What lies beyond that threshold will be shaped not by what AI can do, but by what we choose to do with it.

The future is not inevitable. It is a decision. And the most critical question is not What can machines do? but What will we preserve, protect, and elevate together?

Closing Note: Before We Choose

We have always built tools to extend ourselves: the wheel to carry us farther, the loom to clothe us faster, the engine to move us faster still. Each invention reshaped us, but none have carried our reflection as closely as the machines we’re building now. They learn our habits. They echo our voices. They finish our sentences.

And still, they wait for us to decide what they are for. It is tempting to believe the story is already written, that technology’s arc is inevitable, and that our role is only to adapt. But inevitability is a myth we tell ourselves when we are afraid to choose. The truth is harder, and more hopeful: the future will be no better than the values we embed in it. It will be no wiser than the questions we dare to ask. It will be no kinder than the hands that guide it. So, before we surrender to the machine’s momentum, before we allow its speed to sculpt the architecture of our days, we must stop and ask the only question that matters: What must remain unmistakably, irreplaceably human? Because whatever answer we give, the machine will learn. And once it learns, it will never forget.